Introduction

This article documents the implementation of a production-grade CI/CD pipeline on AWS using Terraform. The project demonstrates automated deployment of a containerised Node.js application using AWS ECS Fargate, with infrastructure fully managed as code.

Project Repository: https://github.com/Amitabh-DevOps/aws-devops

What is AWS DevOps?

AWS DevOps combines development and operations using Amazon Web Services. It enables:

Understanding CI/CD

Continuous Integration (CI): Automatically building and testing code changes.

Continuous Deployment (CD): Automated deployment to production.

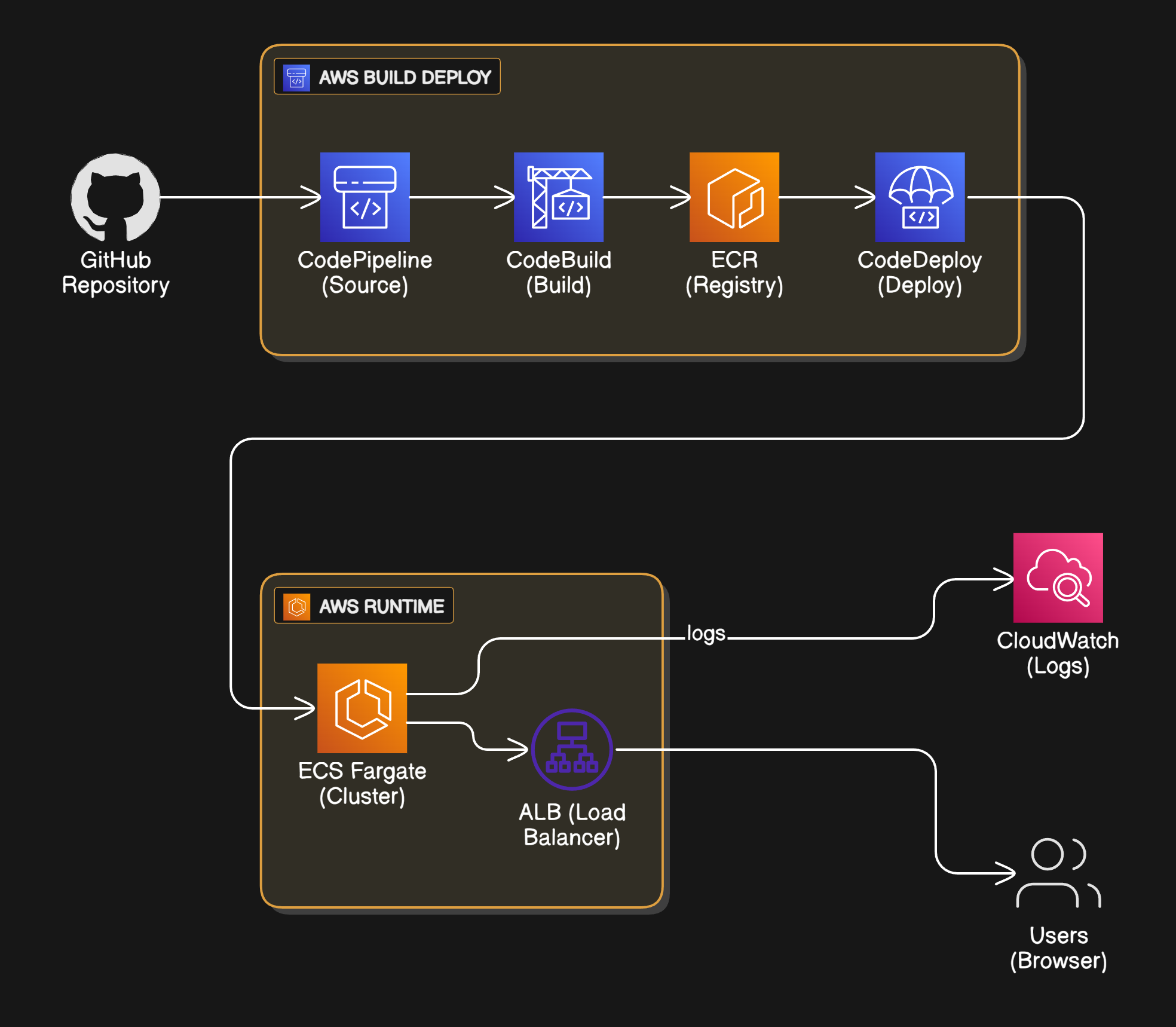

Project Architecture

How It Works

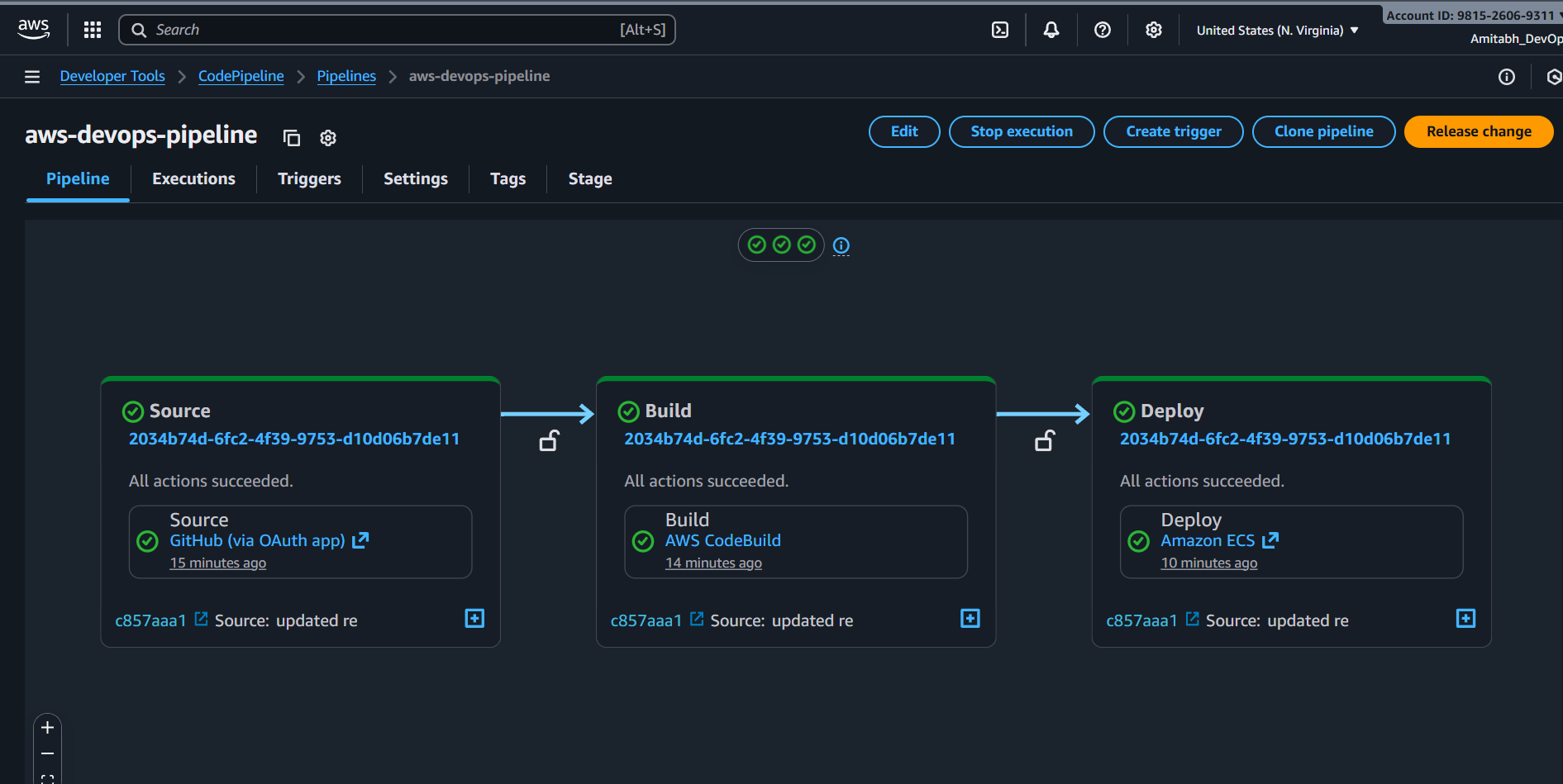

Developer → git push → GitHub → Webhook → CodePipeline

CodeBuild executes buildspec.yml:

Pre-build:

Build:

Post-build:
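The buildspec.yml phases above run inside a CodeBuild project. A minimal Terraform sketch of such a project might look like the following; the resource names, the `aws_iam_role.codebuild` role, and the build image are assumptions, not taken from the repository. `privileged_mode = true` is what lets CodeBuild run Docker builds, and with no explicit log settings CodeBuild writes to `/aws/codebuild/<project-name>`, which matches the `aws-devops-build` log group listed under Monitoring.

```hcl
# Sketch only: resource names and the IAM role are hypothetical.
resource "aws_codebuild_project" "build" {
  name         = "${var.project_name}-build" # "aws-devops-build" with the default project_name
  service_role = aws_iam_role.codebuild.arn  # hypothetical role with the permissions the build needs

  # CodePipeline hands the source to CodeBuild, which runs buildspec.yml
  source {
    type      = "CODEPIPELINE"
    buildspec = "buildspec.yml"
  }

  # Build output is passed back to CodePipeline
  artifacts {
    type = "CODEPIPELINE"
  }

  environment {
    compute_type    = "BUILD_GENERAL1_SMALL"
    image           = "aws/codebuild/standard:7.0" # assumed build image
    type            = "LINUX_CONTAINER"
    privileged_mode = true # required to run the Docker daemon for image builds
  }
}
```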

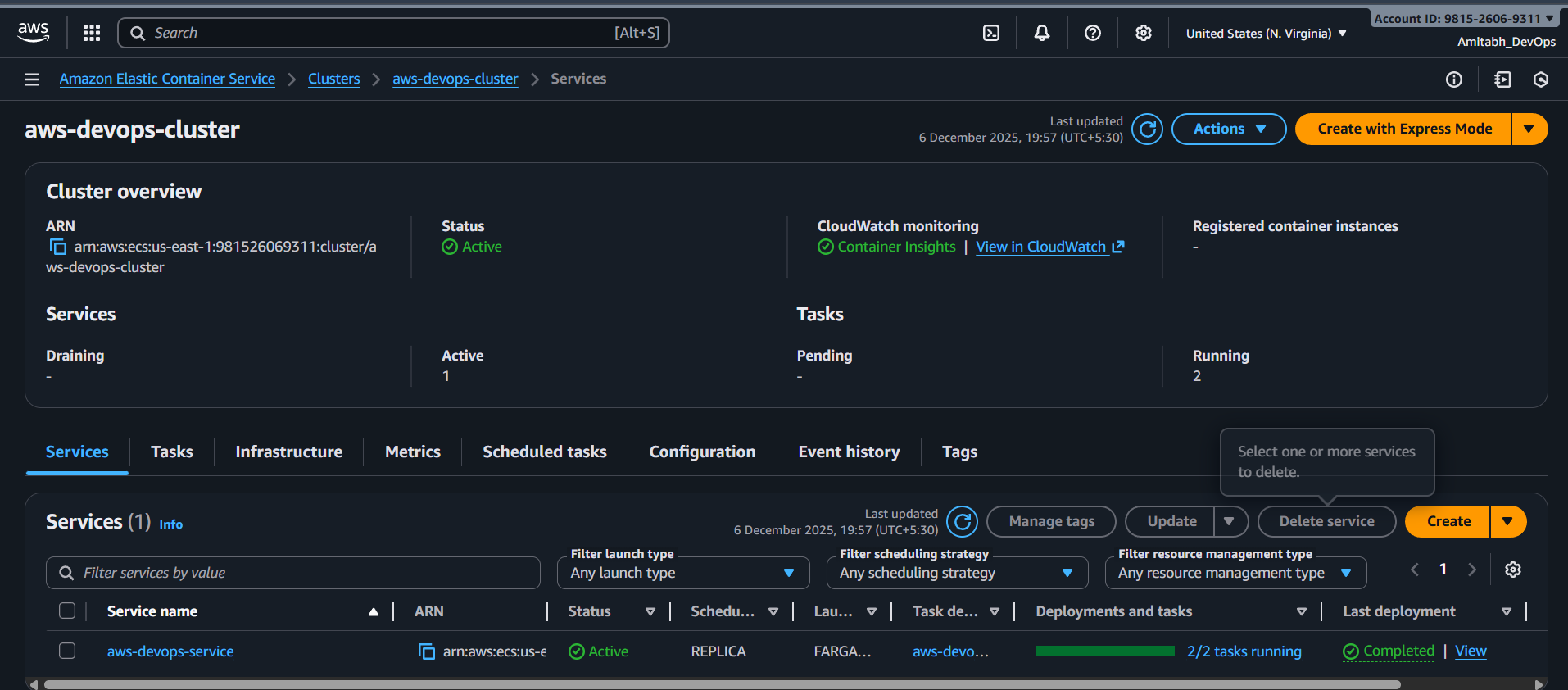

ECS rolling deployment:

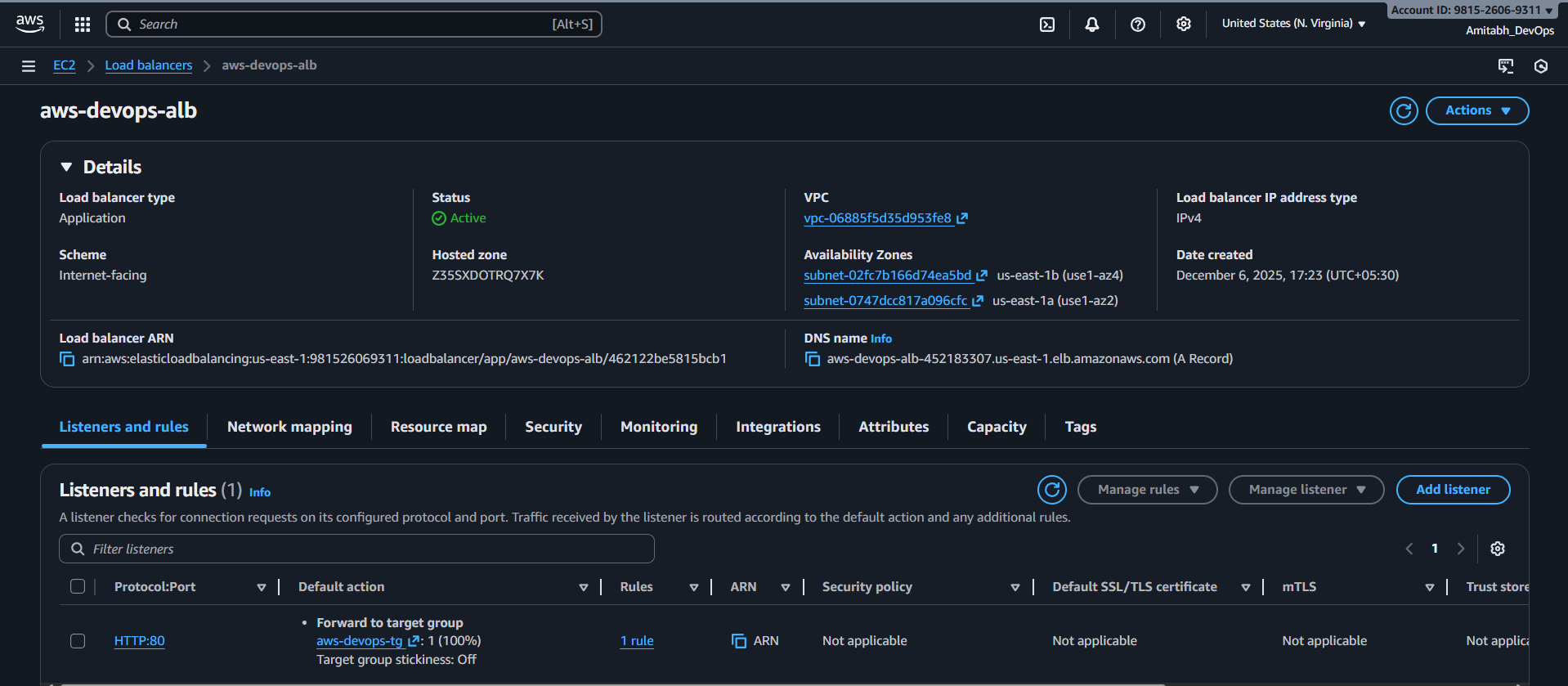
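ECS performs the rolling deployment itself; Terraform only sets its bounds. The sketch below assumes hypothetical resources `aws_ecs_cluster.main`, `aws_ecs_task_definition.app`, `aws_lb_target_group.app`, `aws_lb_listener.http`, `aws_subnet.private` (created with `count`), and `aws_security_group.ecs_tasks`; the project's actual values are not shown in this article. With `deployment_minimum_healthy_percent = 100` and `deployment_maximum_percent = 200`, ECS starts new tasks before stopping old ones, so running capacity never drops below `desired_count` during a rollout.

```hcl
resource "aws_ecs_service" "app" {
  name            = "${var.project_name}-service"
  cluster         = aws_ecs_cluster.main.id
  task_definition = aws_ecs_task_definition.app.arn
  desired_count   = var.desired_count # 2 by default
  launch_type     = "FARGATE"

  # Rolling update bounds: keep 100% of desired tasks running and
  # allow up to 200% while new tasks start and pass health checks
  deployment_minimum_healthy_percent = 100
  deployment_maximum_percent         = 200

  network_configuration {
    subnets          = aws_subnet.private[*].id # assumes subnets created with count
    security_groups  = [aws_security_group.ecs_tasks.id]
    assign_public_ip = false
  }

  # Register each task's IP with the ALB target group on the container port
  load_balancer {
    target_group_arn = aws_lb_target_group.app.arn
    container_name   = var.project_name # must match the container name in the task definition
    container_port   = 3000
  }

  # The target group must be attached to the ALB before the service uses it
  depends_on = [aws_lb_listener.http]

  # Common pattern (assumed here): let the pipeline and autoscaling change
  # these values without Terraform reverting them on the next apply
  lifecycle {
    ignore_changes = [task_definition, desired_count]
  }
}
```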

User → ALB (Port 80) → Target Group → ECS Tasks (Port 3000)

Health checks run every 30 seconds. Unhealthy tasks are replaced automatically.
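A minimal sketch of the listener and target group behind this flow, assuming hypothetical `aws_lb.main` and `aws_vpc.main` resources. Fargate tasks use `awsvpc` networking, so the target group registers tasks by IP (`target_type = "ip"`). The `/health` path and 30-second interval come from this article; the threshold values are assumptions.

```hcl
resource "aws_lb_target_group" "app" {
  name        = "${var.project_name}-tg"
  port        = 3000 # container port of the ECS tasks
  protocol    = "HTTP"
  target_type = "ip" # required for Fargate tasks in awsvpc mode
  vpc_id      = aws_vpc.main.id

  health_check {
    path                = "/health"
    matcher             = "200"
    interval            = 30
    healthy_threshold   = 2 # assumed
    unhealthy_threshold = 3 # assumed
  }
}

# Public entry point: port 80 on the ALB forwards to the target group
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.main.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.app.arn
  }
}
```

Because the health check omits `port`, it uses the traffic port (3000), the same port the tasks serve on.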

Auto Scaling:

CPU-based: Target 70% utilization

Memory-based: Target 80% utilization

Range: 2-4 tasks
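These rules map onto Application Auto Scaling target tracking. A sketch, assuming hypothetical `aws_ecs_cluster.main` and `aws_ecs_service.app` resources: one scalable target holds the 2 to 4 task range, and two policies track average CPU and memory. When several target tracking policies are attached, Application Auto Scaling scales out if any policy calls for it but scales in only when all of them agree, so the busier metric wins.

```hcl
# Scalable target: the ECS service's desired task count, bounded to 2-4
resource "aws_appautoscaling_target" "ecs" {
  service_namespace  = "ecs"
  resource_id        = "service/${aws_ecs_cluster.main.name}/${aws_ecs_service.app.name}"
  scalable_dimension = "ecs:service:DesiredCount"
  min_capacity       = 2
  max_capacity       = 4
}

# Keep average CPU utilization across tasks near 70%
resource "aws_appautoscaling_policy" "cpu" {
  name               = "${var.project_name}-cpu-target"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs.service_namespace
  resource_id        = aws_appautoscaling_target.ecs.resource_id
  scalable_dimension = aws_appautoscaling_target.ecs.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = 70
    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageCPUUtilization"
    }
  }
}

# Keep average memory utilization across tasks near 80%
resource "aws_appautoscaling_policy" "memory" {
  name               = "${var.project_name}-memory-target"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs.service_namespace
  resource_id        = aws_appautoscaling_target.ecs.resource_id
  scalable_dimension = aws_appautoscaling_target.ecs.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = 80
    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageMemoryUtilization"
    }
  }
}
```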

What is Terraform?

Terraform is an Infrastructure as Code (IaC) tool that defines cloud resources using declarative configuration files.

Declarative Syntax: Define the desired state; Terraform determines the steps to achieve it

State Management: Tracks all managed resources in terraform.tfstate

Plan and Apply:

terraform plan: Preview changes

terraform apply: Execute changes

terraform destroy: Remove resources

Resource Dependencies: Automatic determination of creation order
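Terraform infers creation order from references between resources. In this sketch (resource names are illustrative, not from the repository), the subnet refers to `aws_vpc.main.id`, so Terraform creates the VPC first without any explicit ordering. The `terraform graph` command prints the resulting dependency graph in DOT format.

```hcl
resource "aws_vpc" "main" {
  cidr_block = var.vpc_cidr # "10.0.0.0/16" by default

  tags = {
    Name = "${var.project_name}-vpc"
  }
}

# The reference to aws_vpc.main.id is an implicit dependency:
# Terraform waits for the VPC before creating this subnet.
resource "aws_subnet" "public_a" {
  vpc_id            = aws_vpc.main.id
  cidr_block        = cidrsubnet(var.vpc_cidr, 8, 0) # 10.0.0.0/24 for the default CIDR
  availability_zone = data.aws_availability_zones.available.names[0]
}
```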

Terraform Files Explained

main.tf

Configures AWS provider and fetches data sources:

```hcl
provider "aws" {
  region = var.aws_region
}

data "aws_caller_identity" "current" {}
data "aws_availability_zones" "available" {}
```

variables.tf

aws_region: Default "us-east-1"

project_name: Default "aws-devops"

github_repo: Default "Amitabh-DevOps/aws-devops"

container_cpu: Default 256

container_memory: Default 512

desired_count: Default 2

vpc_cidr: Default "10.0.0.0/16"

github_token: Create and use your own
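A sketch of how variables.tf might declare these inputs. The defaults come from the list above; the descriptions and types are illustrative assumptions. Marking `github_token` as `sensitive` keeps it out of plan and apply output, although Terraform still writes the value to the state file.

```hcl
variable "aws_region" {
  description = "AWS region to deploy into"
  type        = string
  default     = "us-east-1"
}

variable "project_name" {
  description = "Prefix used in resource names"
  type        = string
  default     = "aws-devops"
}

variable "github_repo" {
  description = "GitHub repository in owner/name form"
  type        = string
  default     = "Amitabh-DevOps/aws-devops"
}

variable "container_cpu" {
  description = "Fargate task CPU units (256 = 0.25 vCPU)"
  type        = number
  default     = 256
}

variable "container_memory" {
  description = "Fargate task memory in MiB"
  type        = number
  default     = 512
}

variable "desired_count" {
  description = "Initial number of ECS tasks"
  type        = number
  default     = 2
}

variable "vpc_cidr" {
  description = "CIDR block for the VPC"
  type        = string
  default     = "10.0.0.0/16"
}

variable "github_token" {
  description = "GitHub personal access token; supply your own"
  type        = string
  sensitive   = true # redacted in CLI output, but still stored in state
}
```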

outputs.tf
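The outputs themselves are not listed here. A hedged sketch of what outputs.tf could expose for a stack like this; `aws_lb.main`, `aws_ecs_cluster.main`, and `aws_ecs_service.app` are hypothetical resource names, while the account ID comes from the `aws_caller_identity` data source in main.tf.

```hcl
output "alb_dns_name" {
  description = "Public DNS name of the Application Load Balancer"
  value       = aws_lb.main.dns_name
}

output "app_url" {
  description = "URL of the application through the ALB on port 80"
  value       = "http://${aws_lb.main.dns_name}"
}

output "ecs_cluster_name" {
  value = aws_ecs_cluster.main.name
}

output "ecs_service_name" {
  value = aws_ecs_service.app.name
}

output "account_id" {
  description = "AWS account ID from the aws_caller_identity data source"
  value       = data.aws_caller_identity.current.account_id
}
```

After `terraform apply`, `terraform output -raw app_url` prints just the URL, which is convenient for scripting a quick check against `/health`.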

Application Structure

The app/ directory contains:

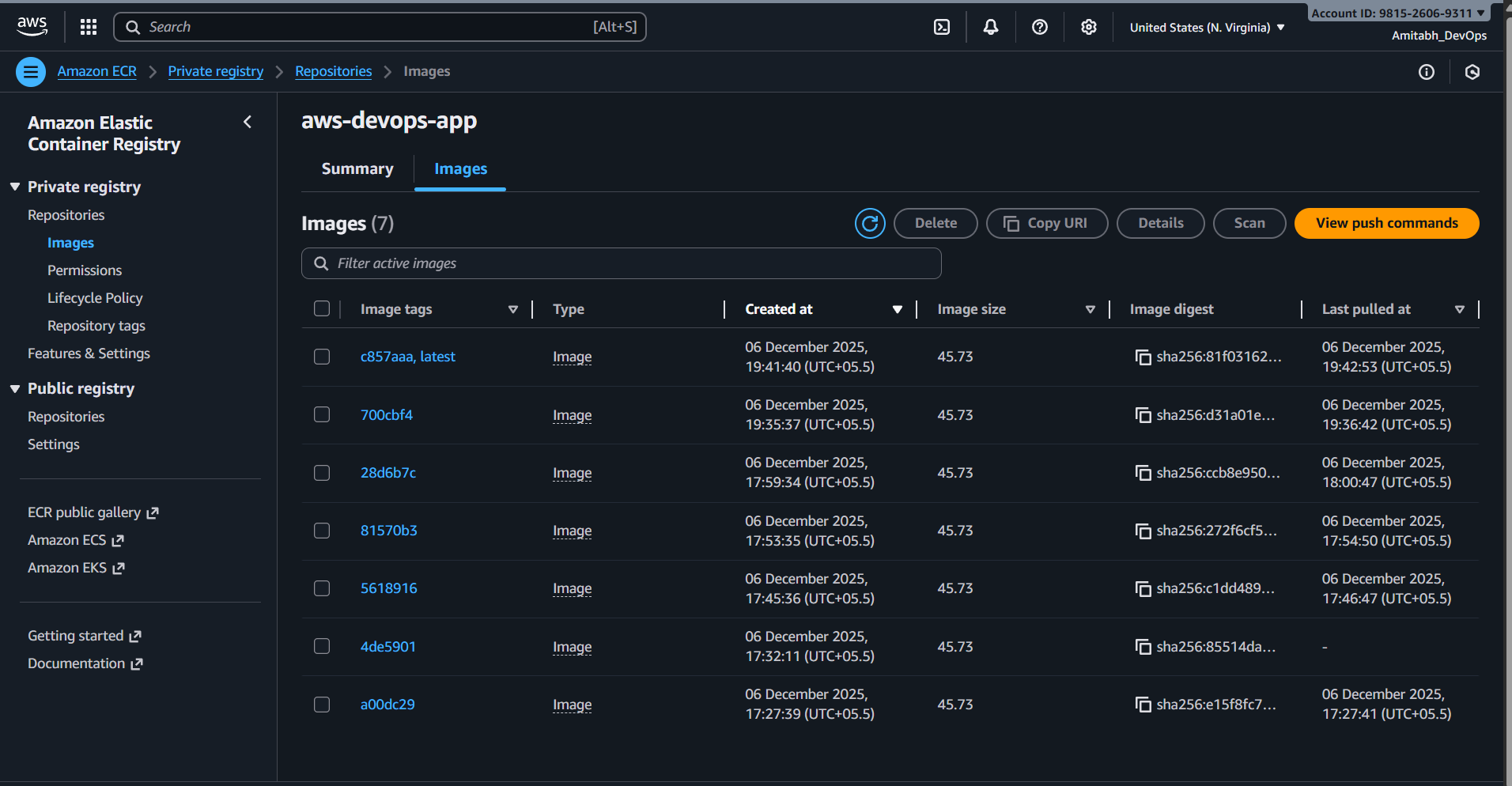

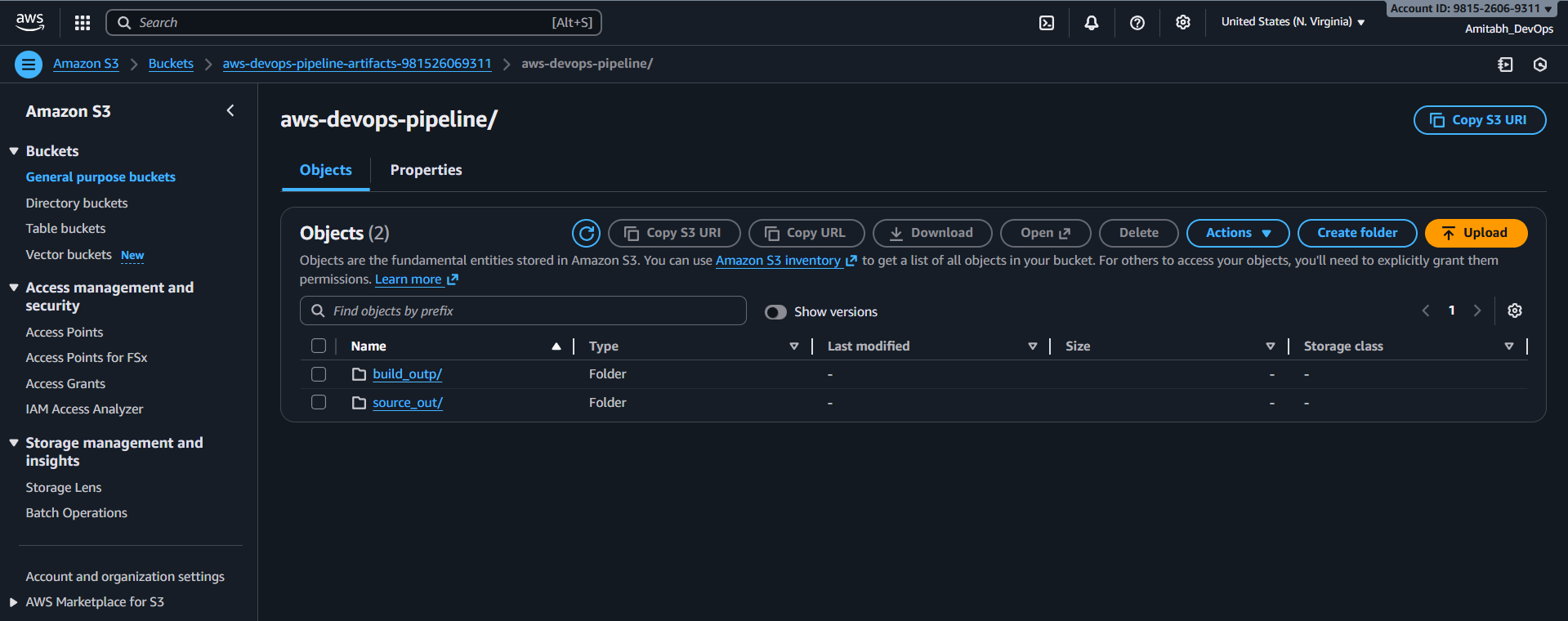

Deployment Flow

Initial Deployment

Deployment takes 5-10 minutes and creates 47 AWS resources.

```bash
# Navigate to the terraform directory
cd terraform

# Copy the example variables file and replace the values with your own
cp terraform.tfvars.example terraform.tfvars

# Initialize Terraform
terraform init

# Validate the configuration
terraform validate

# Preview changes
terraform plan

# Apply the configuration
terraform apply --auto-approve
```
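The terraform.tfvars file copied in the second step sets the variables for your environment. A minimal sketch with placeholder values (the fork name and token are placeholders you must replace); any variable with a default that you leave out keeps that default.

```hcl
# terraform.tfvars: placeholder values, replace with your own
aws_region   = "us-east-1"
project_name = "aws-devops"
github_repo  = "your-github-user/aws-devops" # hypothetical fork
github_token = "REPLACE_WITH_YOUR_GITHUB_TOKEN"
```

Because this file holds the GitHub token, keep it out of version control, for example by listing it in .gitignore.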

Subsequent Deployments

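```bash
# Make code changes, then commit and push
git add .
git commit -m "Update feature"
git push origin main
```

After the push, the pipeline automatically picks up the change, runs the CodeBuild stage defined in buildspec.yml, and rolls the new version out to ECS.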

Monitoring

CloudWatch Logs

/ecs/aws-devops: Application logs

/aws/codebuild/aws-devops-build: Build logs

Container Insights

Enabled on the ECS cluster for:
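The application log group and the Container Insights setting could be declared as follows; the retention period and cluster name are assumptions. The CodeBuild log group needs no resource of its own if CodeBuild uses its default `/aws/codebuild/<project-name>` group and its role may create log groups.

```hcl
# Application log group: "/ecs/aws-devops" with the default project_name
resource "aws_cloudwatch_log_group" "ecs" {
  name              = "/ecs/${var.project_name}"
  retention_in_days = 7 # assumed retention period
}

# ECS cluster with Container Insights turned on
resource "aws_ecs_cluster" "main" {
  name = "${var.project_name}-cluster" # hypothetical cluster name

  setting {
    name  = "containerInsights"
    value = "enabled"
  }
}
```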

Health Checks

Application: /health endpoint returns 200 OK

Container: Docker health check every 30s

Load Balancer: Target group health check every 30s
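The container-level check can be declared in the ECS task definition. ECS acts only on health checks defined in the container definition, not on a HEALTHCHECK instruction baked into the image, so a Docker-level check has to appear here to affect task replacement. A sketch, assuming a hypothetical `var.container_image` variable and `aws_iam_role.ecs_execution` role, and assuming `curl` is available inside the image; the timeout, retries, and start period are illustrative.

```hcl
resource "aws_ecs_task_definition" "app" {
  family                   = var.project_name
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"                       # required for Fargate
  cpu                      = var.container_cpu              # 256 by default
  memory                   = var.container_memory           # 512 by default
  execution_role_arn       = aws_iam_role.ecs_execution.arn # hypothetical role

  container_definitions = jsonencode([
    {
      name         = var.project_name
      image        = var.container_image # hypothetical variable holding the image URI
      essential    = true
      portMappings = [{ containerPort = 3000, protocol = "tcp" }]

      # Container-level health check every 30 seconds (assumes curl exists in the image)
      healthCheck = {
        command     = ["CMD-SHELL", "curl -f http://localhost:3000/health || exit 1"]
        interval    = 30
        timeout     = 5
        retries     = 3
        startPeriod = 10
      }

      # Send container stdout/stderr to the /ecs/<project_name> log group
      logConfiguration = {
        logDriver = "awslogs"
        options = {
          "awslogs-group"         = "/ecs/${var.project_name}"
          "awslogs-region"        = var.aws_region
          "awslogs-stream-prefix" = "ecs"
        }
      }
    }
  ])
}
```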

Destroy the infrastructure end-to-end using:

```bash
terraform destroy --auto-approve
```

Lessons Learned

Conclusion

This project demonstrates a production-ready CI/CD pipeline on AWS with:

Repository: https://github.com/Amitabh-DevOps/aws-devops

Happy Learning!